Why Free Speech Isn’t An Excuse

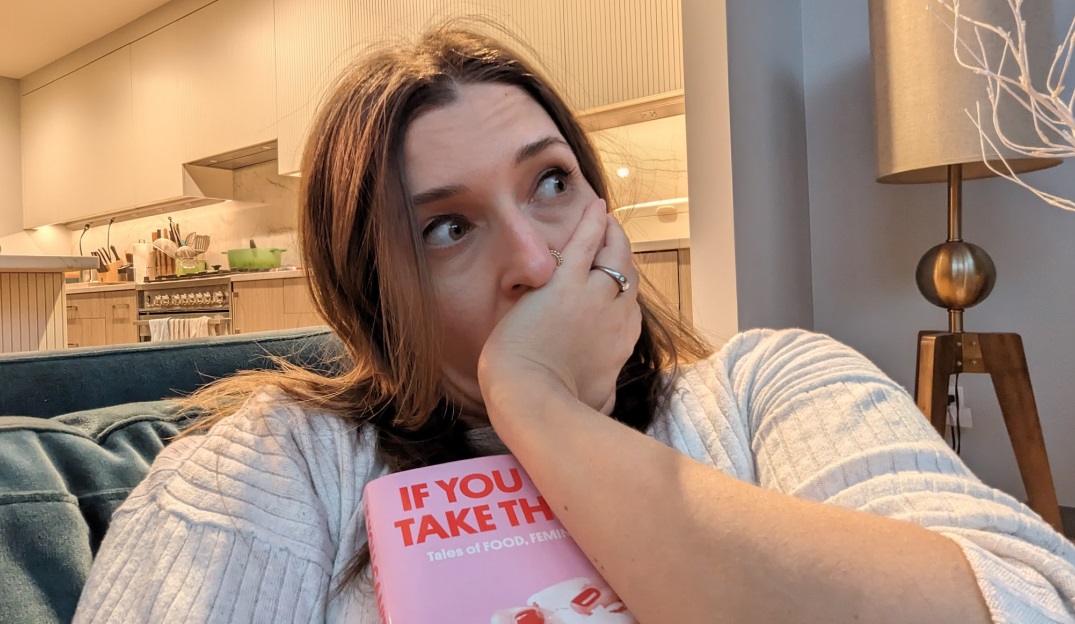

As my online persona has grown over the last decade and a half, so has the volume of hate I have received. I have received letters at my home, had politicians come for me, and received every threat you can imagine, including one individual who told me that I was “too ugly to rape.” (Please, tell me, what is the corollary to this statement?)

After the Batali piece came out, my Twitter account was hacked, and I decided I needed to approach the issue from the distance of academic detachment, lest it overwhelm me entirely. I started researching the nature of online hate. I pored over academic journals. I tried talking to some of the people who sent me hateful comments (something I don’t recommend). I cried in my car a few times at the sheer terror of engaging with people who said they wanted me dead. I asked one guy why he bothered following me, and he replied that he needed to remember “that people like me existed.” Even though, in his opinion, I shouldn’t.

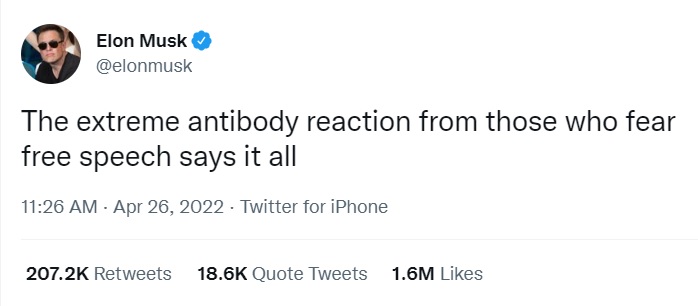

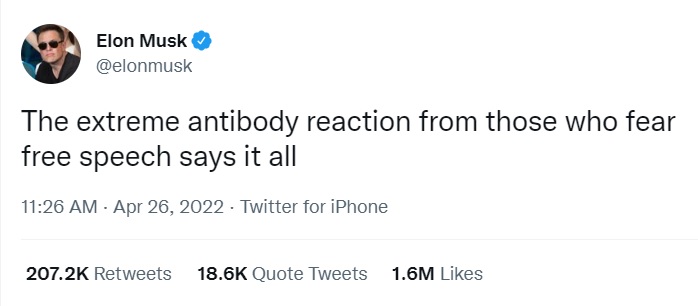

I ended up giving several talks on the subject of online abuse, the ways people try to defend it, and what we can do to combat it. One of the main excuses for why toxic speech should be allowed on social media platforms is that the company allowing it is trying to preserve “free speech”. This is what Elon Musk, who recently bought Twitter for an amount that is roughly Bolivia’s GDP, claims he wants to prioritize when he says things like this:

Musk has said as long as it’s legal, he’s going to allow it.

Let’s be clear: people who are afraid right now aren’t scared of free speech, they’re scared of abusive speech, on a platform that they use both professionally and personally. There are several problems with Musk and his defenders labeling themselves as “champions of free speech” as they create the potential for Twitter to become even more toxic than it is.

The first and foremost is this: Twitter is a non-governmental entity with no obligation to uphold free speech. It’s just an excuse to allow for more toxic discourse (which platforms often try to optimize for, as it increases engagement).

The first five words of the First Amendment are as follows: “Congress shall make no law …” Which means that the only entity that has an obligation to uphold the First Amendment is the government – they can’t legally stop you from exercising that right. But companies, websites, publications, and individuals get to set their own rules for what they’ll tolerate. If someone wants to write about their love of Nic Cage on your blog, you can freely tell them no. You aren’t violating their free speech, because their freedom of speech was never guaranteed on your blog. They are entirely free to go say it elsewhere.

Twitter is an American company, but it operates internationally – users are all over the world. As such, Twitter functions somewhat differently in all those countries, according to their specific laws. For example, if you are using Twitter in Germany, where hate speech is outlawed, you can’t tweet hateful comments without risking having your account banned. They’ve cracked down so much that some users have changed their location to “Germany” to avoid online abuse. So Twitter can and does take action (restricting speech that U.S. law would permit) when the local laws demand it in order to avoid fines and financial penalties.

They’ll take a stand against abuse when it serves their needs – but they won’t do it for their users.

The second issue is that much of the hate speech we’re talking about doesn’t fall under the First Amendment. If speech threatens violence or harm, or it’s libelous, it’s not protected speech – and it can and should be removed from a platform. Musk says that he’ll allow any speech that is legal, but Twitter already allows a lot of illegal speech (and Musk’s talking about fewer restrictions, not more). Most of us have probably already reported a death threat or a threat of bodily harm and received Twitter’s automated reply that it did “not violate their standards.” And there’s tons of speech that falls into a grey area – it’s not quite illegal, but it is libelous. So Musk’s criterion is utterly meaningless.

Under Musk’s rules, all of these comments would be allowed.

Thirdly, allowing hate and toxicity on a platform actually causes more damage to free speech. If Musk – and those like him – really cared about free speech, then they’d realize that the best way to preserve it is to protect their users. Studies show that the groups that receive the most online hate are traditionally underrepresented and marginalized groups – people of color, and those in the LGBTQIA communities, who are more likely to self-censor because they fear online abuse. And those are the groups who are most likely to leave platforms when action isn’t taken against their abusers. The resulting community that is left on the platform risks becoming a hateful monoculture. This is already happening with Twitter, as users leave the platform in droves.

And that’s the biggest problem with this line of thinking: once you allow toxicity and abuse to flourish under the protection of the First Amendment, you actually create less free speech overall.

There are proven ways to stop the toxicity on Twitter. Deplatforming abusers (banning them permanently) has been empirically proven to work. It removes the offender, protects the abused, and lets other users know that abuse will not be tolerated. Establishing community guidelines and holding users to them is also incredibly effective. But in order to do that, a company’s leader needs to care about making it a better place. And that doesn’t seem to be Musk’s goal.